We will use Excel Professional Plus 2013 to access the refined sentiment data.

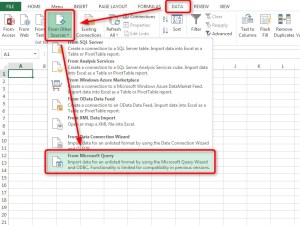

- In Windows, open a new Excel workbook, then select Data > From Other Sources > From Microsoft Query.

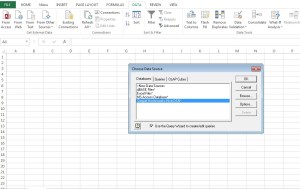

- On the Choose Data Source pop-up, select the Hortonworks ODBC data source you installed previously, then click OK. The Hortonworks ODBC driver enables you to access Hortonworks data with Excel and other Business Intelligence (BI) applications that support ODBC.

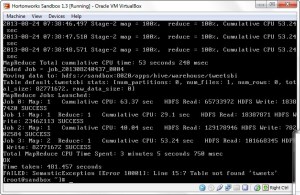

- After the connection to the Sandbox is established, the Query Wizard appears. Select the “tweetsbi” table in the Available tables and columns box, then click the right arrow button to add the entire “tweetsbi” table to the query. Click Next to continue.

- Select the “text” column in the “Columns in your query” box, then click the left arrow button to remove the text column.

- After the “text” column has been removed, click Next to continue.

- On the Filter Data screen, click Next to continue without filtering the data.

- On the Sort Order screen, click Next to continue without setting a sort order.

- Click Finish on the Query Wizard Finish screen to retrieve the query data from the Sandbox and import it into Excel.

- On the Import Data dialog box, click OK to accept the default settings and import the data as a table.

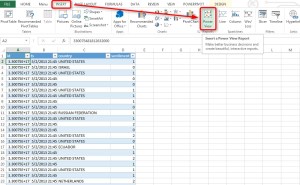

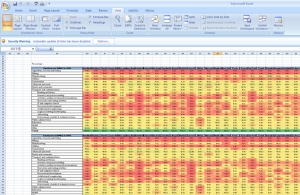

- The imported query data appears in the Excel workbook.
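The Query Wizard steps above amount to selecting every column of “tweetsbi” except “text”, with no filter and no sort order. The following is a minimal sketch of that selection, not the query Excel actually issues: it uses Python's built-in `sqlite3` module with an in-memory table as a stand-in for the Sandbox ODBC connection, and the `country` column, the helper name `load_tweetsbi_without_text`, and the sample rows are all assumptions made for illustration.

```python
import sqlite3


def load_tweetsbi_without_text(conn):
    """Return (column names, rows) for tweetsbi minus the text column.

    Mirrors the wizard choices: whole table added, text removed,
    no filter, no sort order.
    """
    cur = conn.execute("SELECT id, ts, country, sentiment FROM tweetsbi")
    columns = [description[0] for description in cur.description]
    return columns, cur.fetchall()


# Stand-in for the Sandbox: an in-memory table with hypothetical rows.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tweetsbi "
    "(id INTEGER, ts TEXT, country TEXT, sentiment TEXT, text TEXT)"
)
conn.executemany(
    "INSERT INTO tweetsbi VALUES (?, ?, ?, ?, ?)",
    [
        (1, "2013-05-01 10:00:00", "IRELAND", "positive", "sample tweet a"),
        (2, "2013-05-01 10:01:00", "CHINA", "neutral", "sample tweet b"),
        (3, "2013-05-01 10:02:00", "UNITED STATES", "negative", "sample tweet c"),
    ],
)

columns, rows = load_tweetsbi_without_text(conn)
print(columns)
for row in rows:
    print(row)
```

Dropping the “text” column keeps the imported table small, since the later map only needs the location and sentiment fields.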

Now that we have successfully imported the Twitter sentiment data into Microsoft Excel, we can use the Excel Power View feature to analyze and visualize the data.

In this section, we will see how sentiment varies by country.

- In the Excel worksheet with the imported “tweetsbi” table, select Insert > Power View to open a new Power View report.

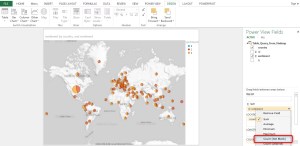

- The Power View Fields area appears on the right side of the window, with the data table displayed on the left. Drag the handles or click the Pop Out icon to maximize the size of the data table.

- In the Power View Fields area, clear the checkboxes next to the id and ts fields, then click Map on the Design tab in the top menu.

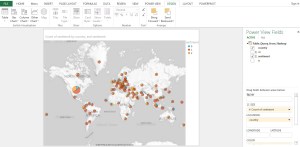

- The map view displays a global view of the data.

- Now let’s display the sentiment data by color. In the Power View Fields area, click sentiment, then select Add as Color.

- Under SIZE, click sentiment, then select Count (Not Blank).

- Now the map displays the sentiment data by color:

    - Orange: positive

    - Blue: negative

    - Red: neutral

- Use the map controls to zoom in on Ireland. About half of the tweets have a positive sentiment score, as indicated by the color orange.

- Next, use the map controls to zoom in on the sentiment data in China.

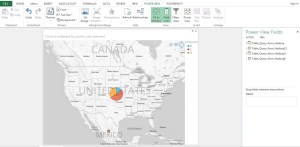

- The United States is the biggest market, so let’s look at sentiment data there. The size of the United States pie chart indicates that a relatively large number of the total tweets come from the US. About half of the tweets in the US show neutral sentiment, with a relatively small amount of negative sentiment.
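The pie charts on the map can be thought of as a grouped count: one group per country, sized by the number of non-blank sentiment values and split by sentiment label. The sketch below illustrates that idea in plain Python. It is not how Power View is implemented, and the function names, the `country` field, the sentiment labels, and the sample rows are hypothetical.

```python
from collections import Counter, defaultdict


def sentiment_by_country(rows):
    """Count sentiment labels per country, skipping blank sentiment values.

    Skipping blanks mirrors the Count (Not Blank) setting used for SIZE.
    """
    counts = defaultdict(Counter)
    for country, sentiment in rows:
        if sentiment is None:
            continue
        counts[country][sentiment] += 1
    return counts


def shares(counter):
    """Convert one country's counts into fractions of its total."""
    total = sum(counter.values())
    return {label: n / total for label, n in counter.items()}


# Hypothetical (country, sentiment) pairs, not data from the Sandbox.
sample_rows = [
    ("IRELAND", "positive"),
    ("IRELAND", "positive"),
    ("IRELAND", "neutral"),
    ("IRELAND", "negative"),
    ("UNITED STATES", "neutral"),
    ("UNITED STATES", "neutral"),
    ("UNITED STATES", "positive"),
    ("UNITED STATES", None),  # blank sentiment, excluded from the count
]

counts = sentiment_by_country(sample_rows)
for country, counter in counts.items():
    # Total drives the pie size; shares drive the colored slices.
    print(country, sum(counter.values()), shares(counter))
```

The total per country corresponds to the size of each pie, and the per-label shares correspond to its colored slices.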